TargetJura - engaging in dialogue with an AI

The use of artificial intelligence (AI) has become an integral part of everyday life and has the potential to fundamentally change many aspects of society. Especially in the recent past, AI has developed rapidly and achieved impressive successes, for example its superhuman performance in games and in many quiz shows. Google and other search engines as we know them today would be unthinkable without AI. AI also enables personalized advertising, can recognize, generate and translate language at a very high level, performs impressively in image recognition, and much more. It seems only a matter of time before AI also surpasses humans in decision-making. What was once considered science fiction is now within reach. But do we even want to reach for it? What is society's attitude toward the rapidly expanding, almost escalating use of AI?

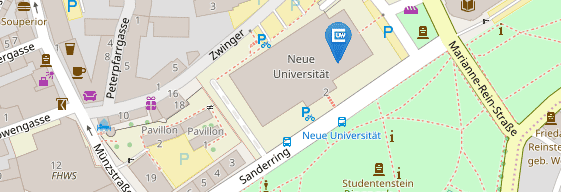

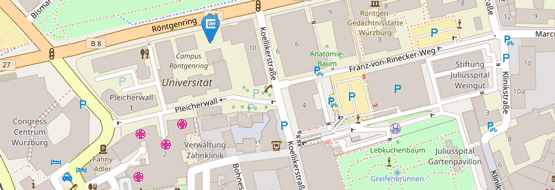

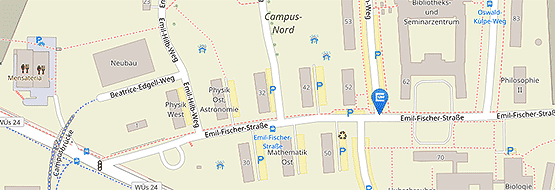

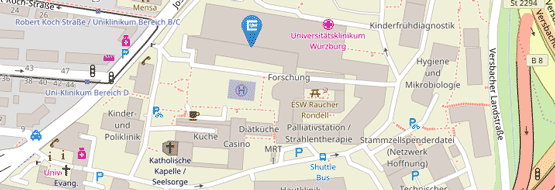

As part of the BMBF-funded legal informatics cooperation project TargetJura (Tutorsystem zu ARGumentationsstrukturen in Ethischen und JURistischen Anwendungen), the RobotRecht research center is working with Prof. Dr. Frank Puppe's Chair of Artificial Intelligence and Knowledge Systems to develop a discussion platform dedicated to the questions raised above. On this platform, a chatbot conducts discussions about ethical questions of AI based on concrete case studies. The chatbot is meant to stimulate these ethical discussions, to ensure that discussion rules are observed, and to bring up the most important arguments in a natural way, without itself advocating any opinion as the correct one. The aim is a rational style of dialogue in an open rather than a goal-oriented discussion.

We are currently looking for participants to chat with our bot and evaluate its latest features. If you are interested, you can register here and we will notify you when a new version of the bot is available!

Scenarios

The topics are broad, and users can choose on the discussion platform which AI scenario they want to discuss. The discussions focus less on technical developments than on the possible social impact of specialized, competent AI in various areas of autonomous (i.e., not merely supportive) decision-making. Such ethical discussions about the benefits and risks of different scenarios are important for opinion formation in a democracy.

The following scenarios are available on the discussion platform; the knowledge-based preparation and matching of arguments is continually being extended and refined.

In civil law, should a legally intelligent program (judAI) decide disputes in the first instance if its judgments are better, i.e., less likely to be corrected on appeal than those of human judges?

Our scenario assumes that an AI judge is permitted in principle, that it is a rule-based program provided by the state, and that the task of the human judges involved is limited to fact-finding.

Targets

Ethical discussions about the possibilities and limits of AI are becoming increasingly relevant with the rapid advancement of this technology. The overall goal of the project is to understand the essential arguments in the dialogue text so that they can be evaluated by comparison with a sample argumentation (or, where necessary, with several alternative justifiable solutions).

Methods and interdisciplinary cooperation

The project is possible only through close cooperation between computer scientists and lawyers. The computer scientists develop the methodological foundations, while the lawyers work out the content of the discussion arguments.

In an ethical discussion, a scenario is described and the question is asked which course of action is appropriate. Users are asked to take a position and justify it. Different options should be discussed and weighed against each other. If options or arguments are missing, the chatbot supplies them.
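To illustrate the idea of supplying missing arguments, here is a minimal Python sketch. It assumes, purely for illustration, that the sample argumentation is a flat list of arguments, each tagged with a stance and a few keywords, and that an argument counts as addressed once any of its keywords appears in a user statement. The `Argument` class, the `missing_arguments` function and the three example arguments are hypothetical placeholders, not TargetJura's actual data or matching method; naive keyword overlap stands in for the project's knowledge-based matching.

```python
import re
from dataclasses import dataclass


@dataclass(frozen=True)
class Argument:
    """One entry of a hypothetical sample argumentation."""
    label: str
    stance: str               # "pro" or "contra" with respect to the ethical question
    keywords: frozenset[str]  # hypothetical trigger words, lowercase


# Hypothetical placeholder arguments for the judAI scenario (not the project's data).
SAMPLE = [
    Argument("Fewer incorrect judgments", "pro", frozenset({"errors", "accuracy", "appeal"})),
    Argument("Lack of transparency", "contra", frozenset({"transparency", "explain", "opaque"})),
    Argument("Equal treatment", "pro", frozenset({"consistent", "equal", "equally"})),
]


def tokens(statement: str) -> set[str]:
    """Lowercase word tokens of a user statement, punctuation removed."""
    return set(re.findall(r"\w+", statement.lower()))


def missing_arguments(sample: list[Argument], statements: list[str]) -> list[Argument]:
    """Arguments from the sample that no user statement has addressed (naive keyword match)."""
    seen: set[str] = set()
    for s in statements:
        seen |= tokens(s)
    return [arg for arg in sample if not (arg.keywords & seen)]


if __name__ == "__main__":
    user_statements = ["I think judAI would make fewer errors on appeal."]
    for arg in missing_arguments(SAMPLE, user_statements):
        # Raise each uncovered argument as a neutral question, without endorsing it.
        print(f"Have you considered: {arg.label} ({arg.stance})?")
```

Running the script prints the two placeholder arguments the user has not yet raised ("Lack of transparency" and "Equal treatment"), phrased as neutral questions. Recognizing arguments in free text requires far more than keyword overlap; the sketch only shows the bookkeeping of which arguments have been addressed and which are still missing.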

The following example shows, in simplified form, the logical structure of the most important arguments as a mind map:

Ethical question: Should a medically intelligent program (medKI) be allowed to make independent diagnostic and treatment decisions based on patient data it receives from medical professionals in examination centers, provided it has performed better than specialists in studies?